neural

-

KAIST ISPI Releases Report on the Global AI Innovation Landscape

Providing key insights for building a successful AI ecosystem

The KAIST Innovation Strategy and Policy Institute (ISPI) has launched a report on the global innovation landscape of artificial intelligence in collaboration with Clarivate Plc. The report shows that AI has become a key technology and that cross-industry learning is an important AI innovation. It also stresses that the quality of innovation, not volume, is a critical success factor in technological competitiveness.

Key findings of the report include:

• Neural networks and machine learning have been unrivaled in terms of scale and growth (more than 46%), and most other AI technologies show a growth rate of more than 20%.

• Although Mainland China has shown the highest growth rate in terms of AI inventions, the influence of Chinese AI is relatively low. In contrast, the United States holds a leading position in AI-related inventions in terms of both quantity and influence.

• The U.S. and Canada have built an industry-oriented AI technology development ecosystem through organic cooperation with both academia and the Government. Mainland China and South Korea, by contrast, have a government-driven AI technology development ecosystem with relatively low qualitative outputs from the sector.

• The U.S., the U.K., and Canada have a relatively high proportion of inventions in robotics and autonomous control, whereas in Mainland China and South Korea, machine learning and neural networks are making progress. Each country/region produces high-quality inventions in its predominant AI fields, while the U.S. has produced high-impact inventions in almost all AI fields.

“The driving forces in building a sustainable AI innovation ecosystem are important national strategies. A country’s future AI capabilities will be determined by how quickly and robustly it develops its own AI ecosystem and how well it transforms the existing industry with AI technologies. Countries that build a successful AI ecosystem have the potential to accelerate growth while absorbing the AI capabilities of other countries. AI talents are already moving to countries with excellent AI ecosystems,” said Director of the ISPI Wonjoon Kim.

“AI, together with other high-tech IT technologies including big data and the Internet of Things, is accelerating the digital transformation by leading an intelligent hyper-connected society and enabling the convergence of technology and business. With the rapid growth of AI innovation, AI applications are also expanding in various ways across industries and in our lives,” added Justin Kim, Special Advisor at the ISPI and a co-author of the report.

2021.12.21 View 10093

Scientists Develop Wireless Networks that Allow Brain Circuits to Be Controlled Remotely through the Internet

Wireless implantable devices and IoT could manipulate the brains of animals from anywhere around the world due to their minimalistic hardware, low setup cost, ease of use, and customizable versatility

A new study shows that researchers can remotely control the brain circuits of numerous animals simultaneously and independently through the internet. The scientists believe this newly developed technology can speed up brain research and various neuroscience studies to uncover basic brain functions as well as the underpinnings of various neuropsychiatric and neurological disorders.

A multidisciplinary team of researchers at KAIST, Washington University in St. Louis, and the University of Colorado, Boulder, created a wireless ecosystem with its own wireless implantable devices and Internet of Things (IoT) infrastructure to enable high-throughput neuroscience experiments over the internet. This innovative technology could enable scientists to manipulate the brains of animals from anywhere around the world. The study was published in the journal Nature Biomedical Engineering on November 25, 2021.

“This novel technology is highly versatile and adaptive. It can remotely control numerous neural implants and laboratory tools in real time or in a scheduled way without direct human interactions,” said Professor Jae-Woong Jeong of the School of Electrical Engineering at KAIST and a senior author of the study. “These wireless neural devices and equipment integrated with IoT technology have enormous potential for science and medicine.”

The wireless ecosystem requires only a mini-computer that can be purchased for under $45, which connects to the internet and communicates with wireless multifunctional brain probes or other types of conventional laboratory equipment using IoT control modules. By integrating the versatility and modular construction of both unique IoT hardware and software within a single ecosystem, this wireless technology offers new applications that have not been demonstrated before by a single standalone technology. Its features include, but are not limited to, minimalistic hardware, global remote access, selective and scheduled experiments, customizable automation, and high-throughput scalability.

“As long as researchers have internet access, they are able to trigger, customize, stop, validate, and store the outcomes of large experiments at any time and from anywhere in the world. They can remotely perform large-scale neuroscience experiments in animals deployed in multiple countries,” said one of the lead authors, Dr. Raza Qazi, a researcher with KAIST and the University of Colorado, Boulder. “The low cost of this system allows it to be easily adopted and can further fuel innovation across many laboratories,” Dr. Qazi added.

One of the significant advantages of this IoT neurotechnology is its ability to be mass deployed across the globe due to its minimalistic hardware, low setup cost, ease of use, and customizable versatility. Scientists across the world can quickly implement this technology within their existing laboratories with minimal budget concerns to achieve globally remote access, scalable experimental automation, or both, thus potentially reducing the time needed to unravel various neuroscientific challenges such as those associated with intractable neurological conditions.

Another senior author on the study, Professor Jordan McCall from the Department of Anesthesiology and Center for Clinical Pharmacology at Washington University in St. Louis, said this technology has the potential to change how basic neuroscience studies are performed. “One of the biggest limitations when trying to understand how the mammalian brain works is that we have to study these functions in unnatural conditions. This technology brings us one step closer to performing important studies without direct human interaction with the study subjects.”

The ability to remotely schedule experiments moves toward automating these types of experiments. Dr. Kyle Parker, an instructor at Washington University in St. Louis and another lead author on the study, added, “This experimental automation can potentially help us reduce the number of animals used in biomedical research by reducing the variability introduced by various experimenters. This is especially important given our moral imperative to seek research designs that enable this reduction.”

The researchers believe this wireless technology may open new opportunities for many applications including brain research, pharmaceuticals, and telemedicine to treat diseases in the brain and other organs remotely. This remote automation technology could become even more valuable when many labs need to shut down, such as during the height of the COVID-19 pandemic.

This work was supported by grants from the KAIST Global Singularity Research Program, the National Research Foundation of Korea, the United States National Institutes of Health, and Oak Ridge Associated Universities.

-Publication: Raza Qazi, Kyle Parker, Choong Yeon Kim, Jordan McCall, Jae-Woong Jeong et al. “Scalable and modular wireless-network infrastructure for large-scale behavioral neuroscience,” Nature Biomedical Engineering, November 25, 2021 (doi.org/10.1038/s41551-021-00814-w)

-Profile: Professor Jae-Woong Jeong, Bio-Integrated Electronics and Systems Lab, School of Electrical Engineering, KAIST

2021.11.29 View 18148

A Mechanism Underlying Most Common Cause of Epileptic Seizures Revealed

An interdisciplinary study shows that neurons carrying somatic mutations in MTOR can lead to focal epileptogenesis via non-cell-autonomous hyperexcitability of nearby nonmutated neurons

During fetal development, cells should migrate to the outer edge of the brain to form critical connections for information transfer and regulation in the body. When even a few cells fail to move to the correct location, the neurons become disorganized and this results in focal cortical dysplasia. This condition is the most common cause of seizures that cannot be controlled with medication in children and the second most common cause in adults.

Now, an interdisciplinary team studying neurogenetics, neural networks, and neurophysiology at KAIST has revealed how dysfunctions in even a small percentage of cells can cause disorder across the entire brain. They published their results on June 28 in Annals of Neurology.

The work builds on a previous finding, also by KAIST scientists, that focal cortical dysplasia is caused by mutations in cells involved in mTOR, a pathway that regulates signaling between neurons in the brain.

“Only 1 to 2% of neurons carrying mutations in the mTOR signaling pathway that regulates cell signaling in the brain have been found to induce seizures in animal models of focal cortical dysplasia,” said Professor Jong-Woo Sohn from the Department of Biological Sciences. “The main challenge of this study was to explain how nearby non-mutated neurons are hyperexcitable.”

Initially, the researchers hypothesized that the mutated cells affected the number of excitatory and inhibitory synapses in all neurons, mutated or not. These neural gates can trigger or halt activity, respectively, in other neurons. Seizures are a result of extreme activity, called hyperexcitability. If the mutated cells upend the balance and result in more excitatory cells, the researchers thought, it made sense that the cells would be more susceptible to hyperexcitability and, as a result, seizures.

“Contrary to our expectations, the synaptic input balance was not changed in either the mutated or non-mutated neurons,” said Professor Jeong Ho Lee from the Graduate School of Medical Science and Engineering. “We turned our attention to a protein overproduced by mutated neurons.”

The protein is adenosine kinase, which lowers the concentration of adenosine. This naturally occurring compound is an anticonvulsant and works to relax vessels. In mice engineered to have focal cortical dysplasia, the researchers injected adenosine to replace the levels lowered by the protein. It worked and the neurons became less excitable.

“We demonstrated that augmentation of adenosine signaling could attenuate the excitability of non-mutated neurons,” said Professor Se-Bum Paik from the Department of Bio and Brain Engineering.

The effect on the non-mutated neurons was the surprising part, according to Paik. “The seizure-triggering hyperexcitability originated not in the mutation-carrying neurons, but instead in the nearby non-mutated neurons,” he said.

The mutated neurons excreted more adenosine kinase, reducing the adenosine levels in the local environment of all the cells. With less adenosine, the non-mutated neurons became hyperexcitable, leading to seizures.

“While we need to further investigate the relationship between the concentration of adenosine and the increased excitation of nearby neurons, our results support the medical use of drugs to activate adenosine signaling as a possible treatment pathway for focal cortical dysplasia,” Professor Lee said.

The Suh Kyungbae Foundation, the Korea Health Technology Research and Development Project, the Ministry of Health & Welfare, and the National Research Foundation in Korea funded this work.

-Publication: Koh, H.Y., Jang, J., Ju, S.H., Kim, R., Cho, G.-B., Kim, D.S., Sohn, J.-W., Paik, S.-B. and Lee, J.H. (2021), ‘Non–Cell Autonomous Epileptogenesis in Focal Cortical Dysplasia,’ Annals of Neurology, 90: 285-299. (https://doi.org/10.1002/ana.26149)

-Profile: Professor Jeong Ho Lee, Translational Neurogenetics Lab (https://tnl.kaist.ac.kr/), Graduate School of Medical Science and Engineering, KAIST

Professor Se-Bum Paik, Visual System and Neural Network Laboratory (http://vs.kaist.ac.kr/), Department of Bio and Brain Engineering, KAIST

Professor Jong-Woo Sohn, Laboratory for Neurophysiology (https://sites.google.com/site/sohnlab2014/home), Department of Biological Sciences, KAIST

Dr. Hyun Yong Koh, Translational Neurogenetics Lab, Graduate School of Medical Science and Engineering, KAIST

Dr. Jaeson Jang, Ph.D., Visual System and Neural Network Laboratory, Department of Bio and Brain Engineering, KAIST

Sang Hyeon Ju, M.D., Laboratory for Neurophysiology, Department of Biological Sciences, KAIST

2021.08.26 View 15863

Hydrogel-Based Flexible Brain-Machine Interface

The interface is easy to insert into the body when dry, but behaves ‘stealthily’ inside the brain when wet

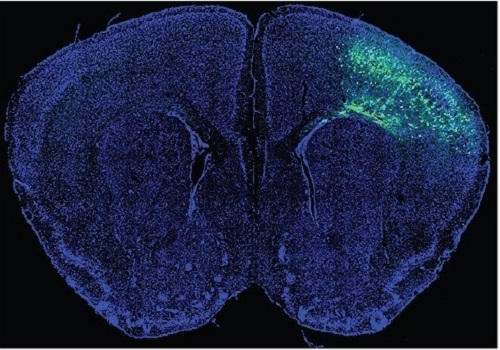

Professor Seongjun Park’s research team and collaborators revealed a newly developed hydrogel-based flexible brain-machine interface. To study the structure of the brain or to identify and treat neurological diseases, it is crucial to develop an interface that can stimulate the brain and detect its signals in real time. However, existing neural interfaces are mechanically and chemically different from real brain tissue. This mismatch causes a foreign body response and the formation of an insulating layer (glial scar) around the interface, which shortens its lifespan.

To solve this problem, the research team developed a ‘brain-mimicking interface’ by inserting a custom-made multifunctional fiber bundle into the hydrogel body. The device comprises not only an optical fiber that controls specific nerve cells with light for optogenetic procedures, but also an electrode bundle to read brain signals and a microfluidic channel to deliver drugs to the brain.

The interface is easy to insert into the body when dry, as hydrogels become solid. But once in the body, the hydrogel quickly absorbs body fluids and takes on properties resembling those of its surrounding tissues, thereby minimizing the foreign body response.

The research team applied the device to animal models and showed that it was possible to detect neural signals for up to six months, which is far beyond what had been previously recorded. It was also possible to conduct long-term optogenetic and behavioral experiments on freely moving mice with a significant reduction in foreign body responses such as glial and immunological activation compared to existing devices.

“This research is significant in that it was the first to utilize a hydrogel as part of a multifunctional neural interface probe, which increased its lifespan dramatically,” said Professor Park. “With our discovery, we look forward to advancements in research on neurological disorders like Alzheimer’s or Parkinson’s disease that require long-term observation.”

The research was published in Nature Communications on June 8, 2021 (Title: Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity). The study was conducted jointly with an MIT research team composed of Professor Polina Anikeeva, Professor Xuanhe Zhao, and Dr. Hyunwoo Yuk.

This research was supported by the National Research Foundation (NRF) grant for emerging research, Korea Medical Device Development Fund, KK-JRC Smart Project, KAIST Global Initiative Program, and Post-AI Project.

-Publication: Park, S., Yuk, H., Zhao, R. et al. Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity. Nat Commun 12, 3435 (2021). https://doi.org/10.1038/s41467-021-23802-9

-Profile: Professor Seongjun Park, Bio and Neural Interfaces Laboratory, Department of Bio and Brain Engineering, KAIST

2021.07.13 View 13805

Hydrogel-Based Flexible Brain-Machine Interface

The interface is easy to insert into the body when dry, but behaves ‘stealthily’ inside the brain when wet

Professor Seongjun Park’s research team and collaborators revealed a newly developed hydrogel-based flexible brain-machine interface. To study the structure of the brain or to identify and treat neurological diseases, it is crucial to develop an interface that can stimulate the brain and detect its signals in real time. However, existing neural interfaces are mechanically and chemically different from real brain tissue. This causes foreign body response and forms an insulating layer (glial scar) around the interface, which shortens its lifespan.

To solve this problem, the research team developed a ‘brain-mimicking interface’ by inserting a custom-made multifunctional fiber bundle into the hydrogel body. The device is composed not only of an optical fiber that controls specific nerve cells with light in order to perform optogenetic procedures, but it also has an electrode bundle to read brain signals and a microfluidic channel to deliver drugs to the brain.

The interface is easy to insert into the body when dry, as hydrogels become solid. But once in the body, the hydrogel will quickly absorb body fluids and resemble the properties of its surrounding tissues, thereby minimizing foreign body response.

The research team applied the device on animal models, and showed that it was possible to detect neural signals for up to six months, which is far beyond what had been previously recorded. It was also possible to conduct long-term optogenetic and behavioral experiments on freely moving mice with a significant reduction in foreign body responses such as glial and immunological activation compared to existing devices.

“This research is significant in that it was the first to utilize a hydrogel as part of a multifunctional neural interface probe, which increased its lifespan dramatically,” said Professor Park. “With our discovery, we look forward to advancements in research on neurological disorders like Alzheimer’s or Parkinson’s disease that require long-term observation.”

The research was published in Nature Communications on June 8, 2021. (Title: Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity) The study was conducted jointly with an MIT research team composed of Professor Polina Anikeeva, Professor Xuanhe Zhao, and Dr. Hyunwoo Yook.

This research was supported by the National Research Foundation (NRF) grant for emerging research, Korea Medical Device Development Fund, KK-JRC Smart Project, KAIST Global Initiative Program, and Post-AI Project.

-Publication

Park, S., Yuk, H., Zhao, R. et al. Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity. Nat Commun 12, 3435 (2021). https://doi.org/10.1038/s41467-021-23802-9

-Profile

Professor Seongjun Park

Bio and Neural Interfaces Laboratory

Department of Bio and Brain Engineering

KAIST

2021.07.13 View 13805 -

What Guides Habitual Seeking Behavior Explained

A new role of the ventral striatum explains habitual seeking behavior

Researchers have been investigating how the brain controls habitual seeking behaviors such as addiction. A recent study by Professor Sue-Hyun Lee from the Department of Bio and Brain Engineering revealed that a long-term value memory maintained in the ventral striatum is a neural basis of our habitual seeking behavior. This research was conducted in collaboration with the research team led by Professor Hyoung F. Kim from Seoul National University. Given that addictive behavior is deemed a habitual one, this research provides new insights for developing therapeutic interventions for addiction.

Habitual seeking behavior involves strong stimulus responses, mostly rapid and automatic ones. The ventral striatum in the brain has been thought to be important for value learning and addictive behaviors. However, it was unclear if the ventral striatum processes and retains long-term memories that guide habitual seeking.

Professor Lee’s team reported a new role of the human ventral striatum, in which long-term memories of high-valued objects are retained as a single representation and may be used to evaluate visual stimuli automatically to guide habitual behavior.

“Our findings propose a role of the ventral striatum as a director that guides habitual behavior with the script of value information written in the past,” said Professor Lee.

The research team investigated whether learned values were retained in the ventral striatum while the subjects passively viewed previously learned objects in the absence of any immediate outcome. Neural responses in the ventral striatum during the incidental perception of learned objects were examined using fMRI and single-unit recording.

The study found significant value discrimination responses in the ventral striatum after learning and a retention period of several days. Moreover, the similarity of neural representations for good objects increased after learning, an outcome positively correlated with the habitual seeking response for good objects.
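The idea of "similarity of neural representations" can be illustrated with a minimal sketch. It assumes each object's neural response is a vector of voxel (or unit) activations and uses the mean pairwise Pearson correlation as the similarity index. The function names and the toy numbers are hypothetical and are not taken from the study, whose actual analysis may differ.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length activation vectors."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def mean_pairwise_similarity(patterns):
    """Average correlation over all pairs of response patterns."""
    pairs = list(combinations(patterns, 2))
    return sum(pearson(p, q) for p, q in pairs) / len(pairs)


# Hypothetical toy data: responses of four voxels to three "good" objects.
before_learning = [
    [1.0, 0.0, 0.5, 0.2],
    [0.1, 0.9, 0.3, 0.8],
    [0.6, 0.2, 0.9, 0.1],
]
after_learning = [
    [1.0, 0.1, 0.6, 0.2],
    [0.9, 0.2, 0.5, 0.3],
    [1.1, 0.0, 0.7, 0.1],
]

similarity_before = mean_pairwise_similarity(before_learning)
similarity_after = mean_pairwise_similarity(after_learning)
print(f"before: {similarity_before:.2f}, after: {similarity_after:.2f}")
```

In this toy data the three patterns converge on a shared profile after learning, so the index rises. That is the kind of change the phrase "increased similarity" describes.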

“These findings suggest that the ventral striatum plays a role in automatic evaluations of objects based on the neural representation of positive values retained since learning, to guide habitual seeking behaviors,” explained Professor Lee.

“We will fully investigate the function of different parts of the entire basal ganglia including the ventral striatum. We also expect that this understanding may lead to the development of better treatment for mental illnesses related to habitual behaviors or addiction problems.”

This study, supported by the National Research Foundation of Korea, was reported in Nature Communications (https://doi.org/10.1038/s41467-021-22335-5).

-Profile

Professor Sue-Hyun Lee

Department of Bio and Brain Engineering

Memory and Cognition Laboratory

http://memory.kaist.ac.kr/lecture

KAIST

2021.06.03 View 12372

Wirelessly Rechargeable Soft Brain Implant Controls Brain Cells

Researchers have invented a smartphone-controlled soft brain implant that can be recharged wirelessly from outside the body. It enables long-term neural circuit manipulation without the need for periodic disruptive surgeries to replace the battery of the implant. Scientists believe this technology can help uncover and treat psychiatric disorders and neurodegenerative diseases such as addiction, depression, and Parkinson’s.

A group of KAIST researchers and collaborators have engineered a tiny brain implant that can be wirelessly recharged from outside the body to control brain circuits for long periods of time without battery replacement. The device is constructed of ultra-soft and bio-compliant polymers to help provide long-term compatibility with tissue. Equipped with micrometer-sized LEDs (equivalent to the size of a grain of salt) mounted on ultrathin probes (the thickness of a human hair), it can wirelessly manipulate target neurons in the deep brain using light.

This study, led by Professor Jae-Woong Jeong, is a step forward from the wireless head-mounted implant neural device he developed in 2019. That previous version could indefinitely deliver multiple drugs and light stimulation treatment wirelessly by using a smartphone. For more, see “Manipulating Brain Cells by Smartphone.”

For the new upgraded version, the research team came up with a fully implantable, soft optoelectronic system that can be remotely and selectively controlled by a smartphone. This research was published on January 22, 2021 in Nature Communications.

The new wireless charging technology addresses the limitations of current brain implants. Wireless implantable device technologies have recently become popular as alternatives to conventional tethered implants, because they help minimize stress and inflammation in freely moving animals during brain studies, which in turn enhances the lifetime of the devices. However, such devices require either intermittent surgeries to replace discharged batteries, or special and bulky wireless power setups, which limit experimental options as well as the scalability of animal experiments.

“This powerful device eliminates the need for additional painful surgeries to replace an exhausted battery in the implant, allowing seamless chronic neuromodulation,” said Professor Jeong. “We believe that the same basic technology can be applied to various types of implants, including deep brain stimulators, and cardiac and gastric pacemakers, to reduce the burden on patients for long-term use within the body.”

To enable wireless battery charging and controls, researchers developed a tiny circuit that integrates a wireless energy harvester with a coil antenna and a Bluetooth low-energy chip. An alternating magnetic field can harmlessly penetrate through tissue, and generate electricity inside the device to charge the battery. Then the battery-powered Bluetooth implant delivers programmable patterns of light to brain cells using an “easy-to-use” smartphone app for real-time brain control.

“This device can be operated anywhere and anytime to manipulate neural circuits, which makes it a highly versatile tool for investigating brain functions,” said lead author Choong Yeon Kim, a researcher at KAIST.

Neuroscientists successfully tested these implants in rats and demonstrated their ability to suppress cocaine-induced behaviour after the rats were injected with cocaine. This was achieved by precise light stimulation of relevant target neurons in their brains using the smartphone-controlled LEDs. Furthermore, the battery in the implants could be repeatedly recharged while the rats were behaving freely, thus minimizing any physical interruption to the experiments.

“Wireless battery re-charging makes experimental procedures much less complicated,” said the co-lead author Min Jeong Ku, a researcher at Yonsei University’s College of Medicine.

“The fact that we can control a specific behaviour of animals, by delivering light stimulation into the brain just with a simple manipulation of smartphone app, watching freely moving animals nearby, is very interesting and stimulates a lot of imagination,” said Jeong-Hoon Kim, a professor of physiology at Yonsei University’s College of Medicine. “This technology will facilitate various avenues of brain research.”

The researchers believe this brain implant technology may lead to new opportunities for brain research and therapeutic intervention to treat diseases in the brain and other organs.

This work was supported by grants from the National Research Foundation of Korea and the KAIST Global Singularity Research Program.

-Profile

Professor Jae-Woong Jeong

https://www.jeongresearch.org/

School of Electrical Engineering

KAIST

2021.01.26 View 28290

Deep Learning Helps Explore the Structural and Strategic Bases of Autism

Psychiatrists typically diagnose autism spectrum disorders (ASD) by observing a person’s behavior and by leaning on the Diagnostic and Statistical Manual of Mental Disorders (DSM-5), widely considered the “bible” of mental health diagnosis.

However, there are substantial differences amongst individuals on the spectrum and a great deal remains unknown by science about the causes of autism, or even what autism is. As a result, an accurate diagnosis of ASD and a prognosis prediction for patients can be extremely difficult.

But what if artificial intelligence (AI) could help? Deep learning, a type of AI, deploys artificial neural networks based on the human brain to recognize patterns in a way that is akin to, and in some cases can surpass, human ability. The technique, or rather suite of techniques, has enjoyed remarkable success in recent years in fields as diverse as voice recognition, translation, autonomous vehicles, and drug discovery.

A group of researchers from KAIST in collaboration with the Yonsei University College of Medicine has applied these deep learning techniques to autism diagnosis. Their findings were published on August 14 in the journal IEEE Access.

Magnetic resonance imaging (MRI) scans of brains of people known to have autism have been used by researchers and clinicians to try to identify structures of the brain they believed were associated with ASD. These researchers have achieved considerable success in identifying abnormal grey and white matter volume and irregularities in cerebral cortex activation and connections as being associated with the condition.

These findings have subsequently been deployed in studies attempting more consistent diagnoses of patients than has been achieved via psychiatrist observations during counseling sessions. While such studies have reported high levels of diagnostic accuracy, the number of participants in these studies has been small, often under 50, and diagnostic performance drops markedly when the methods are applied to larger samples or to datasets that include people from a wide variety of populations and locations.

“There was something as to what defines autism that human researchers and clinicians must have been overlooking,” said Keun-Ah Cheon, one of the two corresponding authors and a professor in the Department of Child and Adolescent Psychiatry at Severance Hospital of the Yonsei University College of Medicine.

“And humans poring over thousands of MRI scans won’t be able to pick up on what we’ve been missing,” she continued. “But we thought AI might be able to.”

So the team applied five different categories of deep learning models to an open-source dataset of more than 1,000 MRI scans from the Autism Brain Imaging Data Exchange (ABIDE) initiative, which has collected brain imaging data from laboratories around the world, and to a smaller, but higher-resolution MRI image dataset (84 images) taken from the Child Psychiatric Clinic at Severance Hospital, Yonsei University College of Medicine. In both cases, the researchers used both structural MRIs (examining the anatomy of the brain) and functional MRIs (examining brain activity in different regions).

The models allowed the team to explore the structural bases of ASD brain region by brain region, focusing in particular on many structures below the cerebral cortex, including the basal ganglia, which are involved in motor function (movement) as well as learning and memory.

Crucially, these specific types of deep learning models also offered up possible explanations of how the AI had come up with its rationale for these findings.

“Understanding the way that the AI has classified these brain structures and dynamics is extremely important,” said Sang Wan Lee, the other corresponding author and an associate professor at KAIST. “It’s no good if a doctor can tell a patient that the computer says they have autism, but not be able to say why the computer knows that.”

The deep learning models were also able to describe how much a particular aspect contributed to ASD, an analysis tool that can assist psychiatric physicians during the diagnosis process to identify the severity of the autism.

“Doctors should be able to use this to offer a personalized diagnosis for patients, including a prognosis of how the condition could develop,” Lee said.

“Artificial intelligence is not going to put psychiatrists out of a job,” he explained. “But using AI as a tool should enable doctors to better understand and diagnose complex disorders than they could do on their own.”

-Profile

Professor Sang Wan Lee

Department of Bio and Brain Engineering

Laboratory for Brain and Machine Intelligence

https://aibrain.kaist.ac.kr/

KAIST

2020.09.23 View 13057

Before Eyes Open, They Get Ready to See

- Spontaneous retinal waves can generate long-range horizontal connectivity in visual cortex. -

A KAIST research team’s computational simulations demonstrated that the waves of spontaneous neural activity in the retinas of still-closed eyes in mammals develop long-range horizontal connections in the visual cortex during early developmental stages.

This new finding, featured as a cover article in the August 19 edition of the Journal of Neuroscience, has resolved a long-standing puzzle in visual neuroscience regarding the early organization of functional architectures in the mammalian visual cortex before eye-opening, especially the long-range horizontal connectivity known as “feature-specific” circuitry.

To prepare the animal to see when its eyes open, neural circuits in the brain’s visual system must begin developing earlier. However, the proper development of many brain regions involved in vision generally requires sensory input through the eyes.

In the primary visual cortex of the higher mammalian taxa, cortical neurons of similar functional tuning to a visual feature are linked together by long-range horizontal circuits that play a crucial role in visual information processing.

Surprisingly, these long-range horizontal connections in the primary visual cortex of higher mammals emerge before the onset of sensory experience, and the mechanism underlying this phenomenon has remained elusive.

To investigate this mechanism, a group of researchers led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering at KAIST implemented computational simulations of early visual pathways using data obtained from the retinal circuits in young animals before eye-opening, including cats, monkeys, and mice.

From these simulations, the researchers found that spontaneous waves propagating in ON and OFF retinal mosaics can initialize the wiring of long-range horizontal connections by selectively co-activating cortical neurons of similar functional tuning, whereas equivalent random activities cannot induce such organizations.
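The contrast between structured, wave-like activity and unstructured activity can be illustrated with a toy correlation-based (Hebbian) learning rule. This is only a sketch under stated assumptions, not the model used in the study: the six-neuron network, the tuning labels, the activity patterns, and the functions `hebbian_weights` and `tuning_selectivity` are all hypothetical.

```python
from itertools import combinations


def hebbian_weights(patterns, n_neurons, rate=1.0):
    """Accumulate w[i][j] += rate * a_i * a_j over all activity patterns."""
    w = [[0.0] * n_neurons for _ in range(n_neurons)]
    for activity in patterns:
        for i, j in combinations(range(n_neurons), 2):
            w[i][j] += rate * activity[i] * activity[j]
            w[j][i] = w[i][j]
    return w


def tuning_selectivity(w, tuning):
    """Mean same-tuning weight minus mean different-tuning weight."""
    same, different = [], []
    for i, j in combinations(range(len(tuning)), 2):
        (same if tuning[i] == tuning[j] else different).append(w[i][j])
    return sum(same) / len(same) - sum(different) / len(different)


# Hypothetical network: six cortical neurons with two tuning preferences.
tuning = ["A", "B", "A", "B", "A", "B"]

# Structured input: each event co-activates neurons sharing a preference.
structured = [[1, 0, 1, 0, 1, 0], [0, 1, 0, 1, 0, 1]] * 10

# Unstructured control: every possible group of three neurons fires once,
# so the number of events and the activity level match the structured case.
unstructured = [
    [1 if k in group else 0 for k in range(6)]
    for group in combinations(range(6), 3)
]

structured_selectivity = tuning_selectivity(hebbian_weights(structured, 6), tuning)
unstructured_selectivity = tuning_selectivity(hebbian_weights(unstructured, 6), tuning)
print(structured_selectivity, unstructured_selectivity)
```

In this toy case the structured input strengthens connections only between like-tuned neurons, while the matched control yields uniform weights and therefore no tuning-specific wiring.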

The simulations also showed that the emergent long-range horizontal connections can induce patterned cortical activity that matches the topography of the underlying functional maps, even in the salt-and-pepper type organizations observed in rodents. This result implies that the model developed by Professor Paik and his group can provide a universal principle for the developmental mechanism of long-range horizontal connections in both higher mammals and rodents.

Professor Paik said, “Our model provides a deeper understanding of how the functional architectures in the visual cortex can originate from the spatial organization of the periphery, without sensory experience during early developmental periods.”

He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF). Undergraduate student Jinwoo Kim participated in this research project and presented the findings as the lead author as part of the Undergraduate Research Participation (URP) Program at KAIST.

Figures and image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.

Publication:

Jinwoo Kim, Min Song, and Se-Bum Paik. (2020). Spontaneous retinal waves generate long-range horizontal connectivity in visual cortex. Journal of Neuroscience. Available online at https://www.jneurosci.org/content/early/2020/07/17/JNEUROSCI.0649-20.2020

Profile: Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile: Jinwoo Kim

Undergraduate Student

bugkjw@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile: Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

2020.08.25 View 15515

Before Eyes Open, They Get Ready to See

- Spontaneous retinal waves can generate long-range horizontal connectivity in visual cortex. -

A KAIST research team’s computational simulations demonstrated that the waves of spontaneous neural activity in the retinas of still-closed eyes in mammals develop long-range horizontal connections in the visual cortex during early developmental stages.

This new finding featured in the August 19 edition of Journal of Neuroscience as a cover article has resolved a long-standing puzzle for understanding visual neuroscience regarding the early organization of functional architectures in the mammalian visual cortex before eye-opening, especially the long-range horizontal connectivity known as “feature-specific” circuitry.

To prepare the animal to see when its eyes open, neural circuits in the brain’s visual system must begin developing earlier. However, the proper development of many brain regions involved in vision generally requires sensory input through the eyes.

In the primary visual cortex of the higher mammalian taxa, cortical neurons of similar functional tuning to a visual feature are linked together by long-range horizontal circuits that play a crucial role in visual information processing.

Surprisingly, these long-range horizontal connections in the primary visual cortex of higher mammals emerge before the onset of sensory experience, and the mechanism underlying this phenomenon has remained elusive.

To investigate this mechanism, a group of researchers led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering at KAIST implemented computational simulations of early visual pathways using data obtained from the retinal circuits in young animals before eye-opening, including cats, monkeys, and mice.

From these simulations, the researchers found that spontaneous waves propagating in ON and OFF retinal mosaics can initialize the wiring of long-range horizontal connections by selectively co-activating cortical neurons of similar functional tuning, whereas equivalent random activities cannot induce such organizations.

The simulations also showed that the emerged long-range horizontal connections can induce patterned cortical activity that matches the topography of the underlying functional maps, even in the salt-and-pepper type organizations observed in rodents. This result implies that the model developed by Professor Paik and his group can provide a universal principle for the developmental mechanism of long-range horizontal connections in both higher mammals and rodents.

Professor Paik said, “Our model provides a deeper understanding of how the functional architectures in the visual cortex can originate from the spatial organization of the periphery, without sensory experience during early developmental periods.”

He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF). Undergraduate student Jinwoo Kim participated in this research project and presented the findings as the lead author as part of the Undergraduate Research Participation (URP) Program at KAIST.

Figures and image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.

Publication:

Jinwoo Kim, Min Song, and Se-Bum Paik. (2020). Spontaneous retinal waves generate long-range horizontal connectivity in visual cortex. Journal of Neuroscience. Available online at https://www.jneurosci.org/content/early/2020/07/17/JNEUROSCI.0649-20.2020

Profile: Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile: Jinwoo Kim

Undergraduate Student

bugkjw@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile: Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

(END)

2020.08.25 View 15515 -

Deep Learning-Based Cough Recognition Model Helps Detect the Location of Coughing Sounds in Real Time

The Center for Noise and Vibration Control at KAIST announced that its cough detection camera recognizes where coughing happens and visualizes the location. The camera can track and record, in real time, the person who coughed, their location, and the number of coughs.

Professor Yong-Hwa Park from the Department of Mechanical Engineering developed a deep learning-based cough recognition model to classify coughing sounds in real time. The cough event classification model is combined with a sound camera that visualizes the locations of cough events in public places. The research team said they achieved a best test accuracy of 87.4%.

Professor Park said that the camera will be useful as medical equipment in public places such as schools, offices, and restaurants during epidemics, and for constantly monitoring patients’ conditions in hospital rooms.

Fever and coughing are the most relevant symptoms of respiratory disease. Fever can already be recognized remotely using thermal cameras, and this new technology is expected to be very helpful for detecting coughing, and therefore epidemic transmission, in a similarly non-contact way. The cough event classification model is combined with a sound camera that visualizes the cough event and indicates its location in the video image.

To develop the cough recognition model, supervised learning was conducted with a convolutional neural network (CNN). The model performs binary classification on a one-second sound profile feature, labeling the input as either a cough event or something else.

For training and evaluation, datasets were collected from Audioset, DEMAND, ETSI, and TIMIT. Coughing and other sounds were extracted from Audioset, and the rest of the datasets were used as background noise for data augmentation so that the model could generalize to the various background noises found in public places.

The dataset was augmented by mixing the coughing and other sounds from Audioset with background noises at ratios of 0.15 to 0.75. The overall volume was then scaled by a factor of 0.25 to 1.0 so that the model generalizes across various distances.
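The augmentation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the research team's code: the function name `augment`, the 16 kHz sampling rate, and the random stand-in clips are hypothetical, and the mixing ratio is assumed here to be the weight applied to the background noise before it is added to the foreground clip.

```python
import numpy as np

def augment(foreground, background, rng,
            mix_range=(0.15, 0.75), gain_range=(0.25, 1.0)):
    """Mix a foreground clip with background noise, then rescale the volume.

    Assumption: the mixing ratio is the weight applied to the background
    noise before it is added to the foreground clip.
    """
    if foreground.shape != background.shape:
        raise ValueError("clips must have the same length")
    mix = rng.uniform(*mix_range)    # background weight, 0.15 to 0.75
    gain = rng.uniform(*gain_range)  # overall volume factor, 0.25 to 1.0
    mixed = gain * (foreground + mix * background)
    return mixed, mix, gain

rng = np.random.default_rng(0)
sr = 16000                          # hypothetical sampling rate
cough = rng.standard_normal(sr)     # stand-in for a one-second cough clip
noise = rng.standard_normal(sr)     # stand-in for a one-second noise clip
mixed, mix, gain = augment(cough, noise, rng)
```

Drawing a fresh ratio and volume factor for every clip is one simple way to expose a model to many signal-to-noise conditions and apparent source distances.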

The training and evaluation datasets were constructed by splitting the augmented dataset at a ratio of 9:1, and the test dataset was recorded separately in a real office environment.
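A 9:1 split of this kind can be illustrated as follows. The helper `split_indices`, the seed, and the dataset size of 1,000 clips are hypothetical; the article does not say how the team shuffled or partitioned its data. The separately recorded test set is not part of this split.

```python
import numpy as np

def split_indices(n_items, train_fraction=0.9, seed=0):
    """Shuffle item indices and split them into training and evaluation sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_items)
    n_train = int(round(n_items * train_fraction))
    return order[:n_train], order[n_train:]

# Hypothetical augmented dataset of 1,000 clips
train_idx, eval_idx = split_indices(1000)
```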

To optimize the network model, training was conducted with various combinations of five acoustic features, including the spectrogram, Mel-scaled spectrogram, and Mel-frequency cepstrum coefficients, and seven optimizers. The performance of each combination was compared on the test dataset. The best test accuracy of 87.4% was achieved with the Mel-scaled spectrogram as the acoustic feature and ASGD as the optimizer.
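For readers unfamiliar with the best-performing feature, the following sketch computes a log Mel-scaled spectrogram with NumPy only. The frame length, hop size, number of Mel bands, and sampling rate are assumptions chosen for illustration, and the commonly used 2595·log10(1 + f/700) Mel formula is assumed as well; the article does not report the team's settings.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters evenly spaced on the Mel scale: (n_mels, n_fft//2 + 1)."""
    freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    bank = np.zeros((n_mels, freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (freqs - lo) / (mid - lo)
        falling = (hi - freqs) / (hi - mid)
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def mel_spectrogram(signal, sr, n_fft=512, hop=256, n_mels=40):
    """Log-power Mel spectrogram with shape (n_frames, n_mels)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window
                       for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2   # linear-frequency power
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T  # pool into Mel bands
    return 10.0 * np.log10(mel + 1e-10)                # decibel scale

sr = 16000                                # hypothetical sampling rate
t = np.arange(sr) / sr                    # one second of audio
tone = np.sin(2 * np.pi * 1000.0 * t)     # 1 kHz test tone
features = mel_spectrogram(tone, sr)
```

The resulting two-dimensional array (time frames by Mel bands) is the kind of image-like input a CNN can classify.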

The trained cough recognition model was combined with a sound camera. The sound camera is composed of a microphone array and a camera module. A beamforming process is applied to the collected acoustic data to find the direction of the incoming sound source. The integrated cough recognition model determines whether the sound is a cough. If it is, the location of the cough is visualized as a contour image with a ‘cough’ label at the location of the coughing sound source in the video image.
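The article does not specify which beamforming method the sound camera uses. As a rough illustration of the idea, the sketch below applies delay-and-sum beamforming, one common approach, to a simulated plane wave. The eight-microphone line array, 4 cm spacing, 16 kHz sampling rate, and the function `steered_power` are all hypothetical; a real sound camera would typically use a two-dimensional array to locate sources in the image plane.

```python
import numpy as np

def steered_power(signals, mic_x, sr, angles_deg, c=343.0):
    """Delay-and-sum output power for each candidate direction.

    signals: (n_mics, n_samples) array of microphone recordings.
    mic_x:   microphone positions in metres along a line.
    """
    n_mics, n_samples = signals.shape
    spectra = np.fft.rfft(signals, axis=1)
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / sr)
    powers = []
    for angle in np.deg2rad(angles_deg):
        delays = mic_x * np.sin(angle) / c  # arrival delay at each mic (s)
        # Undo each microphone's delay with a phase shift, then sum the channels
        aligned = spectra * np.exp(2j * np.pi * freqs[None, :] * delays[:, None])
        powers.append(np.sum(np.abs(aligned.sum(axis=0)) ** 2))
    return np.array(powers)

# Simulate a broadband plane wave arriving from 25 degrees
rng = np.random.default_rng(0)
sr = 16000
mic_x = np.arange(8) * 0.04                 # hypothetical 8-mic line array
true_angle = 25.0
source = rng.standard_normal(2048)
src_spec = np.fft.rfft(source)
freqs = np.fft.rfftfreq(source.size, d=1.0 / sr)
delays = mic_x * np.sin(np.deg2rad(true_angle)) / 343.0
shifted = src_spec[None, :] * np.exp(-2j * np.pi * freqs[None, :] * delays[:, None])
signals = np.fft.irfft(shifted, n=source.size, axis=1)

angles = np.arange(-90.0, 91.0, 1.0)
estimate = angles[np.argmax(steered_power(signals, mic_x, sr, angles))]
```

The candidate direction with the highest summed power is taken as the estimated direction of the source; in a sound camera, a map of this power over directions is what gets overlaid on the video as a contour image.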

A pilot test of the cough recognition camera in an office environment shows that it successfully distinguishes cough events from other events even in a noisy environment. In addition, it can track the location of the person who coughed and count the number of coughs in real time. The performance is expected to improve further with additional training data obtained from other real environments such as hospitals and classrooms.

Professor Park said, “In a pandemic situation like we are experiencing with COVID-19, a cough detection camera can contribute to the prevention and early detection of epidemics in public places. Especially when applied to a hospital room, the patient’s condition can be tracked 24 hours a day and support more accurate diagnoses while reducing the effort of the medical staff.”

This study was conducted in collaboration with SM Instruments Inc.

Profile: Yong-Hwa Park, Ph.D.

Associate Professor

yhpark@kaist.ac.kr

http://human.kaist.ac.kr/

Human-Machine Interaction Laboratory (HuMaN Lab.)

Department of Mechanical Engineering (ME)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr/en/

Daejeon 34141, Korea

Profile: Gyeong Tae Lee

PhD Candidate

hansaram@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Seong Hu Kim

PhD Candidate

tjdgnkim@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Hyeonuk Nam

PhD Candidate

frednam@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Young-Key Kim

CEO

sales@smins.co.kr

http://en.smins.co.kr/

SM Instruments Inc.

Daejeon 34109, Korea

(END)

2020.08.13 View 19153


Hydrogel-Based Flexible Brain-Machine Interface

The interface is easy to insert into the body when dry, but behaves ‘stealthily’ inside the brain when wet

Professor Seongjun Park’s research team and collaborators revealed a newly developed hydrogel-based flexible brain-machine interface. To study the structure of the brain or to identify and treat neurological diseases, it is crucial to develop an interface that can stimulate the brain and detect its signals in real time. However, existing neural interfaces are mechanically and chemically different from real brain tissue. This causes a foreign body response and the formation of an insulating layer (glial scar) around the interface, which shortens its lifespan.

To solve this problem, the research team developed a ‘brain-mimicking interface’ by inserting a custom-made multifunctional fiber bundle into the hydrogel body. The device comprises not only an optical fiber that controls specific nerve cells with light for optogenetic procedures, but also an electrode bundle to read brain signals and a microfluidic channel to deliver drugs to the brain.

The interface is easy to insert into the body when dry, because the hydrogel becomes solid. Once in the body, however, the hydrogel quickly absorbs body fluids and takes on properties resembling those of the surrounding tissue, thereby minimizing the foreign body response.

The research team applied the device to animal models and showed that it was possible to detect neural signals for up to six months, which is far beyond what had been previously recorded. It was also possible to conduct long-term optogenetic and behavioral experiments on freely moving mice with a significant reduction in foreign body responses, such as glial and immunological activation, compared to existing devices.